Vivid Skin – Graduate Project conclusion

Although the deadline for my graduate project has passed, it is not the end of Vivid Skin. As I have mentioned throughout, Vivid Skin is a project that can continually be added to and adapted, improving it and widening its scope.

The initial designs for this project and the final outcome do not differ much. I wanted to create an immersive music visualisation experience, and that is what I have achieved. When I first imagined the project, I thought that getting short-throw projectors and building a solid cylinder would be a lot easier than it turned out to be, so I had to make changes along the way: I made the cylinder out of fabric and projected onto it from the outside rather than the inside. If I had had a much higher budget, I would have used ultra-short-throw projectors and made a solid, free-standing cylinder. Having to adapt taught me a lot more about fabrics, projectors and computers.

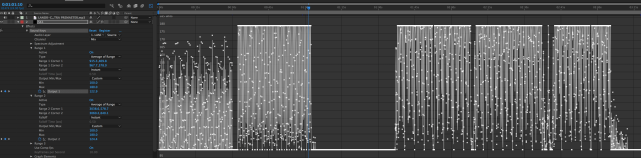

One of the main ideas that I did not fulfil was making the music binaural. I initially wanted to do this to make the experience more immersive for the audience; however, after starting to create the visuals, I realised that it would not be as effective as I first thought. I would have liked to make the audio binaural and have the visuals react to it, but this would have been much more complicated and would have required an engine such as Unity. I used After Effects, which cannot achieve this kind of complexity, so making the audio binaural would not have been effective: the visuals would not have been able to react to the movement of the sound.

Over the course of this project I have taught myself the skills I needed in After Effects and in projection hardware and software, and I have learnt a lot more about computers because of the limitations and issues I came across along the way. I now know much more about PCs and their capabilities when it comes to multi-display output, and about the cabling and hardware needed to run multiple projectors.

If I were to start the project again and make any changes, I would make sure I had a room to myself where I did not need to take down my equipment each time, which would have given me more time for rehearsing and creating visuals.

I am very happy with how the project has turned out, although there is still room for improvement before the exhibition. In the exhibition space I will make the room darker so that the visuals appear clearer. I will also have much more space around the cylinder than I had in the Colab room, so I will be able to spread my projectors more evenly around it and make the projection look completely seamless.

I will also be blogging the next stage of the project: the creation of my trailer, poster and business cards. I look forward to displaying my work at the exhibition and to hearing feedback and reactions from visitors.